
A Map of Operationally Connected Categories as an instrument for classifying physics problems and a basis for developing a universal standard for measuring learning outcomes of students taking physics courses (a novel tool for measuring learning outcomes in physics).

By Valentin Voroshilov

Abstract

Currently there is no tool for measuring students’ learning outcomes that is broadly accepted by teachers, school and district officials, parents, and policymakers. Educational standards cannot provide a basis for such a tool, since for an educator “a standard” means a verbal description of the skills and knowledge which students should be able to demonstrate, not an actual object, or a feature of an object, accompanied by a specific procedure which allows comparing similar features carried by other objects with the standard one (as in physics). There is, however, an approach to standardizing the measurement of physics knowledge that is similar to the standardization of measurements in physics. This approach is based on a specific technique for classifying physics problems. At the core of this classification is the use of graphs such that 1. every quantity represented by a vertex (node) of a graph must have a numerical representation, i.e. it has to be measurable (there has to be a procedure leading to a numerical value of the quantity represented by the vertex); and 2. every link between any two vertices must have an operational representation: i.e. for any quantity represented by a vertex, if its value is changed while the values of all but one of the other quantities represented by vertices connected to it are kept constant, then the quantity represented by the remaining linked vertex must change its value.

(this link: http://www.teachology.xyz/prnes.htm or http://www.teachology.xyz/prnes.pdf opens a presentation delivered at 2016 AAPT-NES meeting)

The main article

“What can’t be measured can’t be managed”. This sentiment is widely accepted in the business community. In education, however, the discussion of “accountability” and “measurability” brings out many controversial views and opinions at all levels of education and educational policy making. What should and should not be measured, when, by whom, and what conclusions and decisions should be derived from the results? But the most controversial question is “how?” Currently there is no tool for measuring students’ learning outcomes that is broadly accepted by teachers, school and district officials, parents, and policymakers. A tool for measuring learning outcomes that is open to the public, consistent with the content taught and learned, and uniform in its procedure and results does not exist. The situation is as if all 50 states had different currencies with no exchange rates: there would be no way to use money from one state in another, and the only way to compare the value of different goods would be barter.

As a consequence, any data on the quality of teaching are usually readily adopted by one “camp” and ignored or even vigorously disputed by another (see, for example, “Boston’s charter schools show striking gains”, The Boston Globe, March 19, 2015).

As a physics teacher I am concerned with having a reliable tool for measuring the learning outcomes of students taking physics courses. In educational sciences such a tool is usually called “a standard”. However, the meaning of this term in educational sciences is very different from its original meaning in physics.

For an educator “a standard” means a verbal description of the skills and knowledge which students should be able to demonstrate, i.e. it is a verbal description of the achievement expectations for students graduating from a certain level of education. There is no guarantee, however, that when different assessment developers use the same standard for preparing testing materials (or other assessment tools) the materials will be equivalent to each other.

In physics the term “standard” has a very different meaning: a standard is an actual object, or a feature of an object, accompanied by a specific procedure which allows comparing similar features carried by other objects with the standard one (that is why “a standard” is also often called “a prototype”). For example, a standard of mass is an actual cylinder. A verbal description such as “a standard of mass looks like a cylinder with diameter and height of about 39 mm, and is made of an alloy of 90 % platinum and 10 % iridium” would not work as a standard, because it is impossible to compare the mass of another object with a sentence.

Over decades of intensive research, many instruments for measuring the learning outcomes of students taking physics courses have been proposed, developed, and used as possible standards, including but not limited to: the Force Concept Inventory (Hestenes et al.), the Force and Motion Conceptual Evaluation (Thornton, Sokoloff), the Brief Electricity and Magnetism Assessment (Ding et al.), the SAT physics subject test, the AP physics exam, and the MCAT (physics questions). However, every current tool for measuring learning outcomes suffers from at least one of the deficiencies listed below:

1. The list of concepts depends on the preference of the developer and is usually not open to users or to the public (when using an inventory one can derive an assumption about the concepts by analyzing the questions in the inventory, but that is only an assumption, and it also depends on who uses the inventory).

2. The set of questions depends on the preference of the developer.

3. The set of questions is limited and cannot be used to extract gradable information on a student’s skills and knowledge.

4. The fundamental principles used for the development of the measuring tool are not clear and not open to the public, teachers, instructors, and professionals.

5. The set of questions becomes available for public examination only after being used; hence it reflects only the view of the developers on what students should know and be able to do, and there is no general consensus among users, instructors, and developers on what should be measured and how to interpret the results.

6. The set of questions changes after each examination, which makes it virtually impossible to compare the results of students taking different tests (the public has to rely on the assertion of the developers that the exams are equivalent, having no evidence of that).

Based on the physics notion of what “a standard” is, I propose that a tool and a procedure used to measure the learning outcomes of students taking a physics course (or, for that matter, any STEM course) must satisfy the following conditions:

(a) Every aspect of the development and the use of the tool has to be open to the public and available for examination by anyone.

(b) The use of the tool must lead to gradable information on a student’s skills and knowledge.

(c) The use of the tool must lead to gradable information on a student’s skills and knowledge, AND must not depend on any specific features of the teaching or learning processes.

(d) The use of the tool must lead to gradable information on a student’s skills and knowledge, and must not depend on any specific features of the teaching or learning processes, AND must allow comparing, on a uniform basis, the learning outcomes of any and all students using the tool.

(e) Any institution adopting the tool should automatically become an active member of the community utilizing the tool and can propose possible alterations to the tool to accommodate changes in the understanding of what students should know and be able to do.

The latter condition mirrors the history of the adoption of standards in physics, when the community of scientists agreed on a common set of standards and measuring procedures.

We can reasonably assume that after the initial adoption of the tool by a number of participating collaborators (a school, a college) and a demonstration of its accuracy and uniformity, this approach will attract the attention of officials at different levels (school principals; district, city, and state officials; staff of philanthropic foundations). The demonstration of such a tool and its applicability would have a profound positive effect on the whole system of education, leading to better comparability, measurability, and accountability without the negative effects which have led to resistance to the use of standardized tests. Such a tool (or rather a set of tools developed for all STEM subjects and for all levels of learning) would be adopted voluntarily and gradually by schools and districts forming a community of active co-developers. Members of the community who adopted the tool would issue regular updates, keeping it in agreement with the current understanding of what students should know and be able to do.

It might seem impossible to develop a tool that would satisfy all the conditions above. However, there is in fact an example of a similar tool in use: at the time without a solid theoretical foundation, but rather as a practical instrument, a tool for measuring the level of physics knowledge of prospective students (high school graduates applying to an institute) was used at Perm Polytechnic Institute (Russia, ~1994 - 2003).

There is no common view on how to probe a student’s understanding of physics. The term “understanding” is interpreted in various ways and cannot be used as a basis for the development of a reliable measuring instrument. However, it is much easier to find a consensus among physics instructors on what type of problems an A student should be able to solve. This idea was used as the basis for a tool for measuring the level of physics knowledge of prospective students.

In the early nineties Perm Polytechnic Institute (PPI) received a large number of applications from high school graduates (the competition to become a PPI undergraduate was fierce). Every applicant had to take a physics entrance exam, and the administration needed a relatively quick, objective procedure, the same for all applicants, to measure their level of physics knowledge. To answer this need, a set of about 3000 problems was developed and used to compose exams, each consisting of 20 problems of different difficulty levels, to be offered to the applicants. Every exam was graded on the same scale, leading to a usable grade distribution. The philosophy behind the approach was simple: it is practically impossible to memorize the solutions of all 3000 problems in the book (the book was openly sold at the PPI bookstore), and even if someone could memorize all the solutions, PPI would definitely want to have that person as a student.

The methodology for developing the tool was based on a “driving exam” approach, which requires, instead of a verbal description of the skills and knowledge a student has to display after taking a course (a.k.a. “educational standards”), a collection of exercises and actions which a student has to demonstrate the ability to perform, assuming the full level of learning (a.k.a. “physics standards”).

Currently this methodology is based on the following four principles.

1. In physics every component of a student’s knowledge or skill can be probed by asking the student to solve a specific theoretical or practical problem (to probe rote knowledge a student can also answer a specific question like “what is ...”).

2. For a given level of learning physics there is a set of problems, which can be used to probe student’s knowledge and skills.

3. For a given level of learning physics a set of problems, which can be used to probe student’s knowledge and skills, has a finite number of items.

Hence, it is plausible to assume that to test the level of acquired physics knowledge and skills a collection of problems can be developed which - when solved in full - would demonstrate the top level of achievement in learning physics (an A level of skills and knowledge). A subset of a reasonable size composed from problems selected from the set can be used as a test/exam, which can be offered to a student to collect gradable information on student’s skills and knowledge.
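The idea of drawing a test of reasonable size from a larger, openly published problem set can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the pool contents, the integer difficulty levels, and the function name `compose_exam` are hypothetical and are not taken from the PPI procedure.

```python
import random
from collections import defaultdict


def compose_exam(pool, per_level, seed=None):
    """Draw per_level[level] distinct problems at random from each level.

    pool      -- list of (problem_id, level) pairs; `level` is a hypothetical
                 difficulty label attached to every problem in the pool
    per_level -- dict mapping level -> number of problems to draw
    """
    rng = random.Random(seed)
    by_level = defaultdict(list)
    for problem_id, level in pool:
        by_level[level].append(problem_id)

    exam = []
    for level in sorted(per_level):
        # random.sample draws without replacement, so no problem repeats
        exam.extend(rng.sample(by_level[level], per_level[level]))
    return exam


# Hypothetical pool: 3000 problem ids spread evenly over three levels.
pool = [(i, 1 + i % 3) for i in range(3000)]
exam = compose_exam(pool, {1: 8, 2: 8, 3: 4}, seed=1)
print(len(exam))  # 20 problems in total
```

Because every exam is drawn from the same published pool with the same number of problems per level, two different exams remain comparable even though their individual problems differ.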

The path to the theoretical foundation of the described approach was laid out by the author in 1996 – 1998 (a version of this work was published in 2015 at http://stacks.iop.org/0031-9120/50/694 ).

This paper represents a short summary and a further advancement of the approach and its application to developing a framework for standardizing knowledge measurement in physics.

4. The fourth principle states that all physics problems can be classified by (a) the set of physics quantities which have to be used to solve a problem; (b) the set of mathematical expressions (which by their nature are either definitions or laws of physics, or derived from them) which have to be used to solve a problem; and (c) the structure of connections between the quantities which defines the sequence of the steps which have to be enacted in order to solve a problem (an example of a general “algorithm” for solving any physics problem is at http://teachology.xyz/general_algorithm.htm).

The latter principle leads to the introduction of new terminology needed to describe and classify different problems (in physics many similar problems use very different wording). All problems which can be solved by applying exactly the same sets of quantities (a) and expressions (b) and using the same sequence of steps (c) are congruent to each other. Problems which use the same sets of quantities (a) and expressions (b) but differ in the sequence (c) are analogous problems. Two problems whose sets of physics quantities (a) differ by one quantity are similar. The four problems below illustrate the terminology: problem A is analogous to problem B and congruent to problem C, and problem D is similar to problems A, B, and C.

Problem A. For a takeoff a plane needs to reach a speed of 100 m/s. The engines provide an acceleration of 8.33 m/s². Find the time it takes for the plane to reach this speed.

Problem B. For a takeoff a plane needs to reach a speed of 100 m/s. It takes 12 s for the plane to reach this speed. Find the acceleration of the plane during its run on the ground.

Problem C. A car starts from rest and reaches a speed of 18 m/s, moving with a constant acceleration of 6 m/s². Find the time it takes for the car to reach this speed.

Problem D. For a takeoff a plane needs to reach a speed of 100 m/s. The engines provide an acceleration of 8.33 m/s². Find the distance it travels before reaching this speed.

It is important to stress that all congruent problems can be stated in a general language that does not depend on the actual situation described in a problem. Problem E below is congruent to problems A and C and is stated in the most general language, not connected to any specific situation.

Problem E. An object starts moving from rest keeping constant acceleration. How much time does it need to reach a given speed?
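The terminology above can be expressed as a small classification routine. The sketch below is only one possible encoding, not the author's implementation: the `Problem` class, the string labels for quantities, equations and steps, and the reading of "differ by one quantity" as "at most one quantity is unique to each problem" are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Problem:
    quantities: frozenset   # (a) physical quantities needed
    expressions: frozenset  # (b) equations needed
    steps: tuple            # (c) ordered steps of the solution


def relation(p, q):
    """Classify the relation between two problems."""
    if p.quantities == q.quantities and p.expressions == q.expressions:
        return "congruent" if p.steps == q.steps else "analogous"
    # Assumption: "differ by one quantity" means that each problem has
    # at most one quantity the other lacks (one swapped, added or removed).
    only_p = p.quantities - q.quantities
    only_q = q.quantities - p.quantities
    if (only_p or only_q) and len(only_p) <= 1 and len(only_q) <= 1:
        return "similar"
    return "unrelated"


# Hypothetical encodings of problems A-D (equation labels are assumed).
kinematics = frozenset({"v0", "v", "a", "t"})
A = Problem(kinematics, frozenset({"v = v0 + a*t"}), ("solve for t",))
B = Problem(kinematics, frozenset({"v = v0 + a*t"}), ("solve for a",))
C = Problem(kinematics, frozenset({"v = v0 + a*t"}), ("solve for t",))
D = Problem(frozenset({"v0", "v", "a", "d"}),
            frozenset({"v**2 = v0**2 + 2*a*d"}), ("solve for d",))

print(relation(A, B), relation(A, C), relation(A, D))
```

With these encodings the routine reproduces the relations stated in the text: A is analogous to B, congruent to C, and similar to D.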

Based on the fourth principle we can also state that a unique visual representation can be assigned to every problem, reflecting the general structure of the connections within the problem. This visual representation is a graph (an entity of graph theory): each node (vertex) of the graph represents a physical quantity without the explicit use of which the problem cannot be solved; each edge (link) of the graph represents the presence of a specific equation which includes both quantities connected by the edge. Each graph is a specific example of knowledge mapping, but it has to obey two strict conditions (which make each graph unique and this approach novel):

1. Every quantity represented by a vertex (node) of a graph must have a numerical representation, i.e. it has to be measurable (there has to be a procedure leading to a numerical value of the quantity represented by the vertex).

2. Every link (edge) between any two vertices must have an operational representation: i.e. for any quantity represented by a vertex, if its value is changed while the values of all but one of the other quantities represented by vertices connected to it are kept constant, then the quantity represented by the remaining linked vertex must change its value.
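The second condition can be checked numerically for a concrete equation. The sketch below assumes the constant-acceleration relation v = v0 + a*t as the equation behind the links; the function names `solve` and `operationally_linked`, the sample values, and the extra quantity `m` (a mass that does not enter the equation) are hypothetical.

```python
def solve(target, known):
    """Solve the assumed relation v = v0 + a*t for `target`."""
    if target == "v":
        return known["v0"] + known["a"] * known["t"]
    if target == "v0":
        return known["v"] - known["a"] * known["t"]
    if target == "a":
        return (known["v"] - known["v0"]) / known["t"]
    if target == "t":
        return (known["v"] - known["v0"]) / known["a"]
    raise ValueError(f"unknown quantity: {target}")


def operationally_linked(changed, responding, state, delta=1.0):
    """True if changing `changed`, with every quantity except `responding`
    held constant, forces `responding` to take a new value."""
    perturbed = {k: x for k, x in state.items() if k != responding}
    perturbed[changed] += delta
    return abs(solve(responding, perturbed) - state[responding]) > 1e-12


state = {"v0": 0.0, "a": 8.33, "t": 12.0}
state["v"] = solve("v", state)

# Every ordered pair of the four quantities passes the check.
all_linked = all(operationally_linked(x, y, state)
                 for x in state for y in state if x != y)

# A quantity that does not enter the equation produces no link.
mass_linked = operationally_linked("m", "v", {**state, "m": 1000.0})
print(all_linked, mass_linked)
```

In this sketch a link is drawn only when the check succeeds, so a quantity such as the mass, which leaves the speed unchanged here, would not be connected to it.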

Graphs which satisfy the above two conditions represent a novel visual technique for representing specific scientific content, called “a map of operationally connected categories”, or MOCC. Knowledge maps usually represent the thought process of an expert in a field solving a particular problem (analyzing a particular situation). MOCCs represent objective connections between physical quantities, which are imposed by the laws of nature.

The second condition eliminates possible indirect connections/links (otherwise the structure of a graph would not be fixed by the structure of the problem, but would depend on the preferences of the person drawing the MOCC). However, even in this case there is room for discussion: should the links represent only definitions and fundamental laws, or also expressions derived from them? This question should be answered during the trial use of the developed tool.

Expanding the language introduced above, we define all problems whose MOCCs include exactly the same set of quantities (a) as like problems (they compose a set of like problems). A problem which is stated in a general language and which is like all the problems in a set of like problems is the root problem of the set; any specific problem in a set can be seen as a variation of the root problem. A set of root problems can be used to describe the desired level of learning outcomes of students.
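Grouping problems into sets of like problems amounts to grouping them by the set of quantities in their MOCCs. A minimal sketch, assuming each problem is encoded simply as a set of quantity labels (the labels and the function name `group_like` are hypothetical):

```python
from collections import defaultdict


def group_like(problems):
    """Group problem names by the set of quantities in their MOCCs."""
    groups = defaultdict(list)
    for name, quantities in problems.items():
        groups[frozenset(quantities)].append(name)
    return dict(groups)


# Hypothetical quantity sets for problems A-E from the text.
problems = {
    "A": {"v0", "v", "a", "t"},
    "B": {"v0", "v", "a", "t"},
    "C": {"v0", "v", "a", "t"},
    "D": {"v0", "v", "a", "d"},
    "E": {"v0", "v", "a", "t"},
}
like_sets = group_like(problems)
for quantities, names in like_sets.items():
    print(sorted(quantities), names)
```

Under this encoding problems A, B, C and E fall into one set of like problems, with the generally stated problem E playing the role of its root problem, while problem D belongs to a different set.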

Members of the community using the tool for measuring the learning outcomes of students taking a physics course would agree on the set of root problems used as the basis for the tool. Each member could then use its own set of specific problems congruent to the set of root problems. This approach would ensure the complete equivalency of the results when measuring learning outcomes.

One of the consequences of the graphical representation of a problem is the ability to assign to it an objective numerical indicator of its difficulty:

$D = N_V + N_E$, which is the sum of the number of vertices included in the MOCC, $N_V$, and the number of unique equations represented by the links in the MOCC, $N_E$. This indicator can be used for ranking problems by their difficulty when composing a specific test to be offered to a student. It does not depend on the perception of the person composing the test and provides uniformity in composing tests (this is the simplest objective indicator of the difficulty of a physics problem, based on the topology of the MOCC corresponding to the problem).

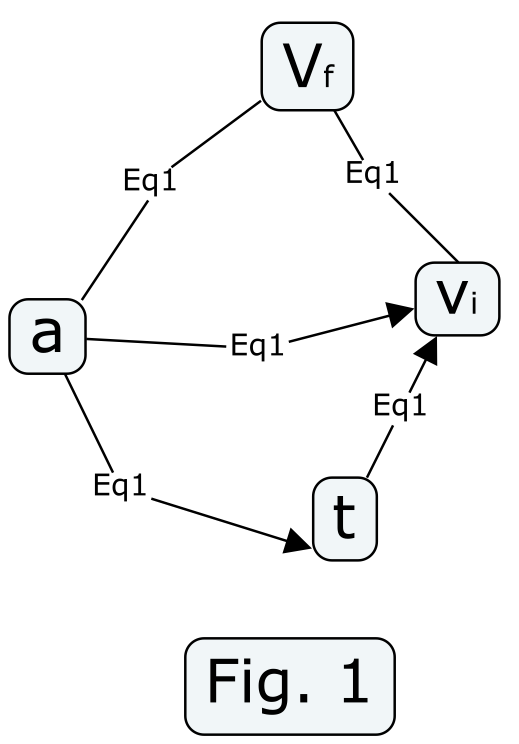

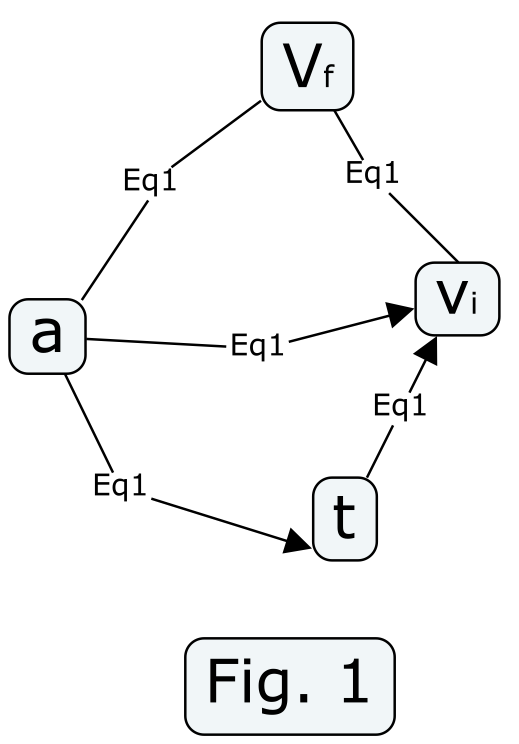

For example: every specific problem congruent to problem E corresponds to MOCC in Fig. 1 with difficulty D = 5 (four quantities and one equation which relates them).

Each link of the graph on the right represents the same connection/equation: $v = v_0 + at$.

(the MOCC was drawn using CmapTools: http://cmap.ihmc.us/)
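The difficulty indicator for this example can be computed directly from a list of vertices and labelled links. The sketch below assumes that every pair of the four quantities is linked and that all links carry the same equation label; the label text and the function name `difficulty` are assumptions for illustration.

```python
from itertools import combinations


def difficulty(vertices, edges):
    """D = N_V + N_E: the number of vertices plus the number of unique
    equations represented by the links.

    edges -- iterable of (quantity_1, quantity_2, equation_label) triples
    """
    n_v = len(set(vertices))
    n_e = len({equation for _, _, equation in edges})
    return n_v + n_e


# Root problem E: four quantities, every link labelled with one equation.
vertices = ["v0", "v", "a", "t"]
equation = "v = v0 + a*t"  # assumed label for the single equation
edges = [(p, q, equation) for p, q in combinations(vertices, 2)]

D = difficulty(vertices, edges)
print(len(edges), D)  # 6 links, D = 5
```

Counting unique equation labels rather than links is what keeps the result at D = 5 here: the six links all represent one equation, so they add only one to the total.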

MOCCs currently represent the most accurate instrument for classifying physics problems and for developing a tool for objectively measuring the learning outcomes of students taking physics courses. The same principle can also be used for developing an analogous tool for measuring the learning outcomes of students taking any STEM course.

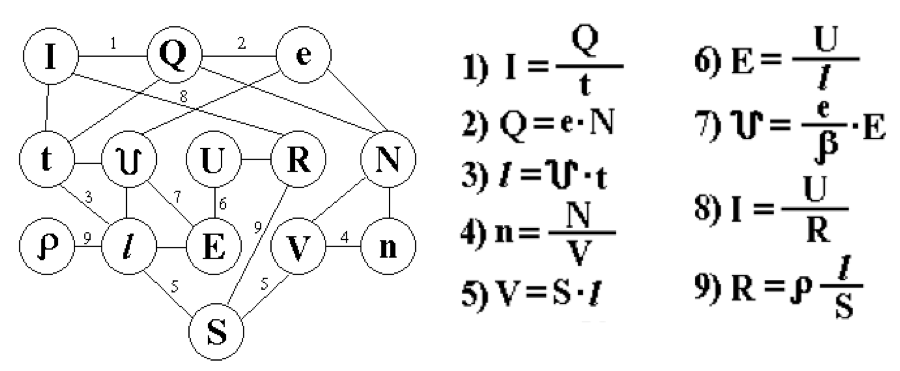

The use of MOCCs as a pedagogical instrument is also worth noting. Visualizing the logical connections between quantities helps students grasp the logic of constructing the solution of a given problem. Some students develop a habit of using MOCCs when they study physics. A MOCC can also be used as an end-of-topic assignment, in which students use it to visualize all the important connections they have learned. Below is an example of such a MOCC developed by a high school student after the topic “Direct Electric Current”. On the pedagogical use of MOCCs and other learning aids, visit http://teachology.xyz/la.htm.

To summarize the main idea:

All like problems have a MOCC with the same vertices, though different links may be used to solve each problem. Each MOCC describes a set of like problems. Each like problem (in general, any problem) can be stated in a general (i.e. abstract) language which does not depend on the specific physical situation described in the problem. A problem which is stated in a general language and which is like all the problems in a set of like problems is the root problem of the set; any specific problem in a set can be seen as a variation of the root problem.

A set of root problems can be used to describe desired level of learning outcomes of students.

I believe the time has come to create a coalition of individuals and institutions who would see the development of objective and universal tools for measuring learning outcomes in physics as an achievable goal.

The first step would be agreeing on the set of root problems and classifying them by difficulty. We need a group of people who would collect all the problems from all available textbooks and systematically convert each problem into a root problem, draw a MOCC for each problem, and calculate its difficulty.

All individual MOCCs combined together would represent a map of the objective connections in physics as a field of natural science. This map could be used to teach AI to solve physics problems in the way it uses geographical maps to “solve driving problems” (together with other learning tools, this AI could eventually replace an average physics teacher). But for us MOCCs represent the most accurate instrument for classifying physics problems and the nucleus of a tool for measuring the learning outcomes of students objectively and uniformly (i.e. comparably).

The same principle can also be used for developing an analogous tool for measuring the learning outcomes of students taking any STEM course.

References

Thornton R.K., Sokoloff D.R. (1998) Assessing student learning of Newton's laws: The Force and Motion Conceptual Evaluation and Evaluation of Active Learning Laboratory and Lecture Curricula. Amer J Physics 66: 338-352.

Halloun I. A., Hestenes D. (1985) Common sense concepts about motion. American Journal of Physics 53: 1043-1055.

Hestenes D., Wells M., Swackhamer G. (1992) Force concept inventory. The Physics Teacher 30: 141-166.

Hestenes D., 1998. Am. J. Phys. 66:465

Ding L., Chabay R., Sherwood B., & Beichner R. (2006). Evaluating an electricity and magnetism assessment tool: Brief Electricity and Magnetism Assessment (BEMA). Phys. Rev. ST Physics Ed. Research 2

Voroshilov V., “Learning aides for students taking physics”, Phys. Educ. 50 (2015) 694-698, http://stacks.iop.org/0031-9120/50/694 (October, 2015)

Voroshilov V., “Universal Algorithm for Solving School Problems in Physics” // in the book "Problems in Applied Mathematics and Mechanics". - Perm, Russia, 1998. - p. 57.

Voroshilov V., “Application of Operationally-Interconnect Categories for Diagnosing the Level of Students' Understanding of Physics” // in the book “Artificial Intelligence in Education”, part 1. - Kazan, Russia, 1996. - p. 56.

Voroshilov V., “Quantitative Measures of the Learning Difficulty of Physics Problems” // in the book “Problems of Education, Scientific and Technical Development and Economy of Ural Region”. - Berezniki, Russia, 1996. - p. 85.
